I recently completed my PhD at UNSW Sydney, where I was advised by Dr. Lina Yao and Dr. Dong Gong.

Prior to that, I completed an Erasmus Mundus Joint Master's Degree in Advanced Systems Dependability at the University of St Andrews, UK, and l'Université de Lorraine, France. During my master's, I interned with the MULTISPEECH group at Inria Nancy, where I worked with Dr. Emmanuel Vincent.

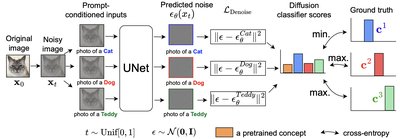

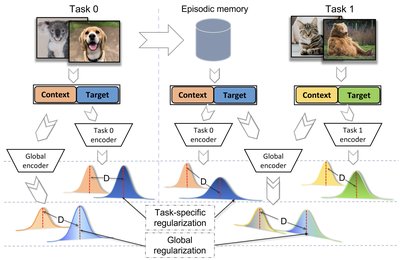

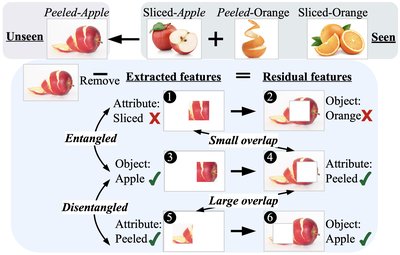

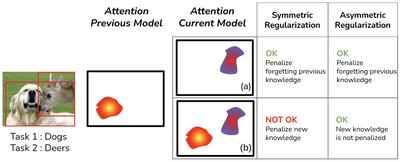

My current research interests span multimodal generative models, agentic frameworks, and continual learning.